HashiCorp Nomad is a simple and flexible orchestrator used to deploy and manage containers and non-containerized applications across multiple cloud, on-premises, and edge environments. It is widely adopted and used in production by organizations such as [Roblox](https://www.hashicorp.com/case-studies/roblox) and Q2. We are excited to announce that Nomad 1.8 is now generally available.

Here’s what’s new in Nomad 1.8, Nomad’s first LTS release:

- exec2 task driver (beta)

- Consul integration improvements: Consul API gateway, transparent proxy, and admin partitions

- A re-worked jobs page in the web UI with live updates

- Job descriptions in the web UI

- Time-based task execution (Enterprise)

- Support for specifying existing cgroups

- nomad-bench: A benchmarking framework for the core scheduler

- Sentinel policy management in the web UI (Enterprise)

- GitHub Actions for Nomad and Nomad Pack

Nomad Enterprise 1.8 LTS release

We are pleased to announce a Long-Term Support (LTS) release program for HashiCorp Nomad Enterprise, starting with Nomad 1.8. Going forward, the first major release of each calendar year will be an LTS release. LTS releases have the following benefits for Nomad operators:

- Extended maintenance: Two years of critical fixes provided through minor releases

- Efficient upgrades: Support for direct upgrades from one LTS release to the next, reducing major-version upgrade risk and improving operational efficiency

LTS releases reduce operational overhead and risk by letting organizations receive critical fixes in minor releases without having to upgrade their major version more than once a year. Once you’ve upgraded to Nomad Enterprise 1.8, you will be running on a maintained LTS release that will allow you to easily upgrade to the next LTS major release when it's available. For more information, refer to HashiCorp’s multi-product LTS statement.

exec2 task driver (beta)

Nomad has always provided support for heterogeneous workloads. The Nomad exec task driver provides a simple and relatively easy way to run binary workloads in a sandboxed environment. Nomad 1.8 introduces a beta release of a new exec2 task driver.

Similar to the exec driver, the new exec2 driver is used to execute a command for a task. However, it offers a security model optimized for running “ordinary” processes with very short startup times and minimal overhead in terms of CPU, disk, and memory utilization. The exec2 driver leverages kernel features such as the Landlock LSM and cgroups v2, along with the unshare system utility. As a result, tasks are no longer required to use chroot-based filesystem isolation, which enhances security and improves performance for the Nomad operator.
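The exec2 driver is loaded by the Nomad client as a plugin, so the client agent needs a matching plugin block before tasks can use it. The following is a minimal sketch of that block, assuming the plugin binary is named nomad-driver-exec2 and sits in the agent's plugin directory. The option names reflect the driver's beta documentation as best understood here and should be checked against the version you install; the extra path is a hypothetical example.

```hcl
# Nomad client agent configuration (sketch; verify option names against
# the exec2 driver documentation for your installed version).
plugin "nomad-driver-exec2" {
  config {
    # Unveil a default set of system paths to every task.
    unveil_defaults = true

    # Extra paths unveiled to all tasks on this client (hypothetical example).
    unveil_paths = ["r:/etc/mime.types"]

    # Allow individual tasks to request their own paths with `unveil`.
    unveil_by_task = true
  }
}
```

Each unveil entry is a mode:path pair, where the mode letters grant access such as read (r) or write (w) to that path. Task-level unveil lists are expected to be honored only when the client allows them.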

Below is a task that uses the new exec2 driver, which must be installed on the Nomad client host prior to executing the task:

    job "http" {
      group "web" {
        task "python" {
          driver = "exec2"

          config {
            command = "python3"
            args    = ["-m", "http.server", "8080", "--directory", "${NOMAD_TASK_DIR}"]
            unveil  = ["r:/etc/mime.types"]
          }
        }
      }
    }

Consul integration improvements

HashiCorp Consul is a service networking platform that provides service discovery for workloads, service mesh for secure service-to-service communication, and dynamic application configuration for workloads. Nomad is frequently deployed together with Consul for a variety of large-scale use cases, and has long supported Consul service mesh for securing service-to-service traffic. With Nomad 1.8, we introduce official support for Consul API gateway, transparent proxy on service mesh, and admin partitions for multi-tenancy with Consul Enterprise.

Consul API gateway

Consul API gateway is a dedicated ingress solution for intelligently routing traffic to applications on your Consul service mesh. It provides a consistent method for handling inbound requests to the service mesh from external clients. Consul API gateway routes any API requests from clients across various protocols (TCP/HTTP) to the appropriate backend service and also performs duties such as load balancing to backend service instances, modifying HTTP headers for requests, and splitting traffic between multiple services based on weighted ratios.
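Weighted traffic splitting and header modification are both expressed on a route attached to the gateway. The following is a minimal sketch of an http-route configuration entry that does both, assuming two backend services named hello-app-v1 and hello-app-v2 and a gateway named my-api-gateway with a listener named my-http-listener. All of these names, and the header, are hypothetical.

```hcl
Kind = "http-route"
Name = "hello-canary-route" # hypothetical route name

Rules = [
  {
    Matches = [
      {
        Path = {
          Match = "prefix"
          Value = "/hello"
        }
      }
    ]

    # Set a request header before forwarding (header name is hypothetical).
    Filters = {
      Headers = [
        {
          Set = {
            "x-routed-by" = "api-gateway"
          }
        }
      ]
    }

    # Weights are relative: about 90% of requests go to v1, 10% to v2.
    Services = [
      {
        Name   = "hello-app-v1"
        Weight = 90
      },
      {
        Name   = "hello-app-v2"
        Weight = 10
      }
    ]
  }
]

# Attach the route to a listener on the gateway.
Parents = [
  {
    Kind        = "api-gateway"
    Name        = "my-api-gateway"
    SectionName = "my-http-listener"
  }
]
```

Shifting the weights over time is one way to roll out a new service version gradually without changing client-facing URLs.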

Although Consul API gateway has supported VM runtimes since Consul 1.15, running API gateway on Nomad and routing traffic to services on Nomad was not officially supported. Nomad 1.8 provides a prescriptive job specification along with a supporting tutorial on how to securely deploy Consul API gateway on Nomad.

Below is an example of running Consul API gateway as a Nomad job when using the provided example job spec. Notably, the example leverages HCL2 variables to specify the latest versions of Consul and Envoy.

    nomad job run \
      -var="consul_image=hashicorp/consul:1.18.2" \
      -var="envoy_image=hashicorp/envoy:1.28.3" \
      -var="namespace=consul" \
      ./api-gateway.nomad.hcl

Once Consul API gateway is running on Nomad, you can define routes using Consul configuration entries to bind traffic-routing rules for requests to backend services on the mesh. The example below creates a new HTTP routing rule that routes requests with an HTTP prefix path of /hello to a backend service on the mesh named hello-app:

    Kind = "http-route"
    Name = "my-http-route"

    // Rules define how requests will be routed
    Rules = [
      {
        Matches = [
          {
            Path = {
              Match = "prefix"
              Value = "/hello"
            }
          }
        ]
        Services = [
          {
            Name = "hello-app"
          }
        ]
      }
    ]

    Parents = [
      {
        Kind        = "api-gateway"
        Name        = "my-api-gateway"
        SectionName = "my-http-listener"
      }
    ]

For more information on using Consul API gateway with Nomad, see the Deploy a Consul API Gateway on Nomad tutorial.
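The Parents block in the route refers to a gateway and one of its listeners by name, so a matching api-gateway configuration entry has to exist. The following is a minimal sketch of such an entry, assuming a plain HTTP listener. The port number is an assumption and should match whatever port the gateway job actually exposes.

```hcl
Kind = "api-gateway"
Name = "my-api-gateway"

Listeners = [
  {
    # Must match SectionName in the route's Parents block.
    Name     = "my-http-listener"
    Protocol = "http"
    Port     = 8088 # assumed port; use the one your gateway job exposes
  }
]
```

Configuration entries like this one and the route can be applied with `consul config write`, passing the path of the file that holds the entry.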

Transparent proxy

Transparent proxy is a key service mesh feature that lets applications communicate through the service mesh without modifying their configurations. It also hardens application security by preventing direct inbound connections to services that bypass the mesh.

Consul service mesh on Nomad has always required addressing upstream services using the explicit address of the local proxy to route traffic to the upstream service. Nomad 1.8 introduces a more seamless way of dialing upstream services: configuring services to use transparent proxy with a new transparent_proxy block. This simplifies the configuration of Consul service mesh by eliminating the need to configure upstreams blocks in Nomad. Instead, the Envoy proxy will determine its configuration entirely from Consul service intentions:

    group "api" {
      network {
        mode = "bridge"
      }

      service {
        name = "count-api"
        port = "9001"

        connect {
          sidecar_service {
            proxy {
              transparent_proxy {}
            }
          }
        }
      }

      task "web" {
        driver = "docker"

        config {
          image = "hashicorpdev/counter-api:v3"
        }
      }
    }

When a service is configured to use transparent proxy, workloads can now dial the service using a virtual IP Consul DNS name as shown here:

    group "dashboard" {
      network {
        mode = "bridge"

        port "http" {
          static = 9002
          to     = 9002
        }
      }

      service {
        name = "count-dashboard"
        port = "http"

        connect {
          sidecar_service {
            proxy {
              transparent_proxy {}
            }
          }
        }
      }

      task "dashboard" {
        driver = "docker"

        env {
          COUNTING_SERVICE_URL = "http://count-api.virtual.consul"
        }

        config {
          image = "hashicorpdev/counter-dashboard:v3"
        }
      }
    }

Note that to use transparent proxy, the consul-cni plugin must be installed on the client host, alongside the reference CNI plugins, in the directory specified by the Nomad client's cni_path configuration. Nomad invokes the consul-cni plugin to configure iptables rules in the allocation's network namespace, forcing outbound traffic from the allocation to flow through the proxy.
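Because neither group declares an upstreams block, whether count-dashboard can actually reach count-api depends on Consul intentions. The following is a minimal sketch of a service-intentions configuration entry that permits that traffic, assuming both services live in the default namespace and partition and that the mesh otherwise denies traffic by default.

```hcl
Kind = "service-intentions"
Name = "count-api" # destination service

Sources = [
  {
    Name   = "count-dashboard" # source service allowed to connect
    Action = "allow"
  }
]
```

With an entry like this in place, requests from the dashboard task to count-api.virtual.consul should be accepted by the count-api sidecar, while sources without an allow intention would be rejected.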

Admin partitions

Consul supports native multi-tenancy through admin partitions, which were released in Consul 1.11 (Enterprise only). Nomad can now register services in Consul on a specific admin partition, or interact with the Consul KV API on a specific partition via the template block:

    job "docs" {
      group "example" {
        consul {
          cluster   = "default"
          namespace = "default"
          partition = "prod"
        }

        service {
          name = "count-api"
          port = "9001"
        }

        task "web" {
          template {
            data        = "FRONTEND_NAME="
            destination = "local/config.txt"
          }
        }

        task "app" {
          template {
            data        = "APP_NAME="
            destination = "local/config.txt"
          }
        }
      }
    }

Re-worked Jobs page in the web UI with live updates

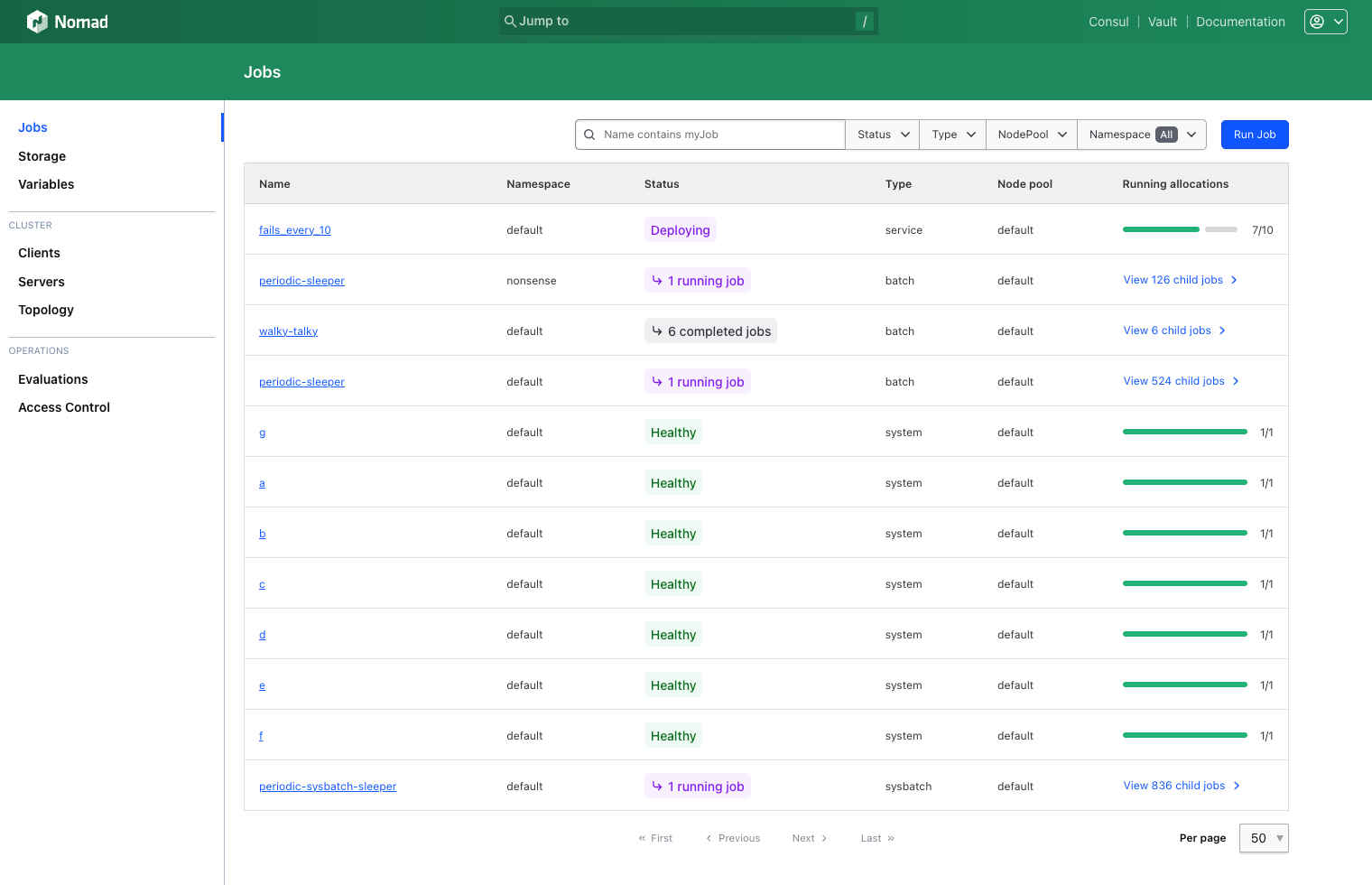

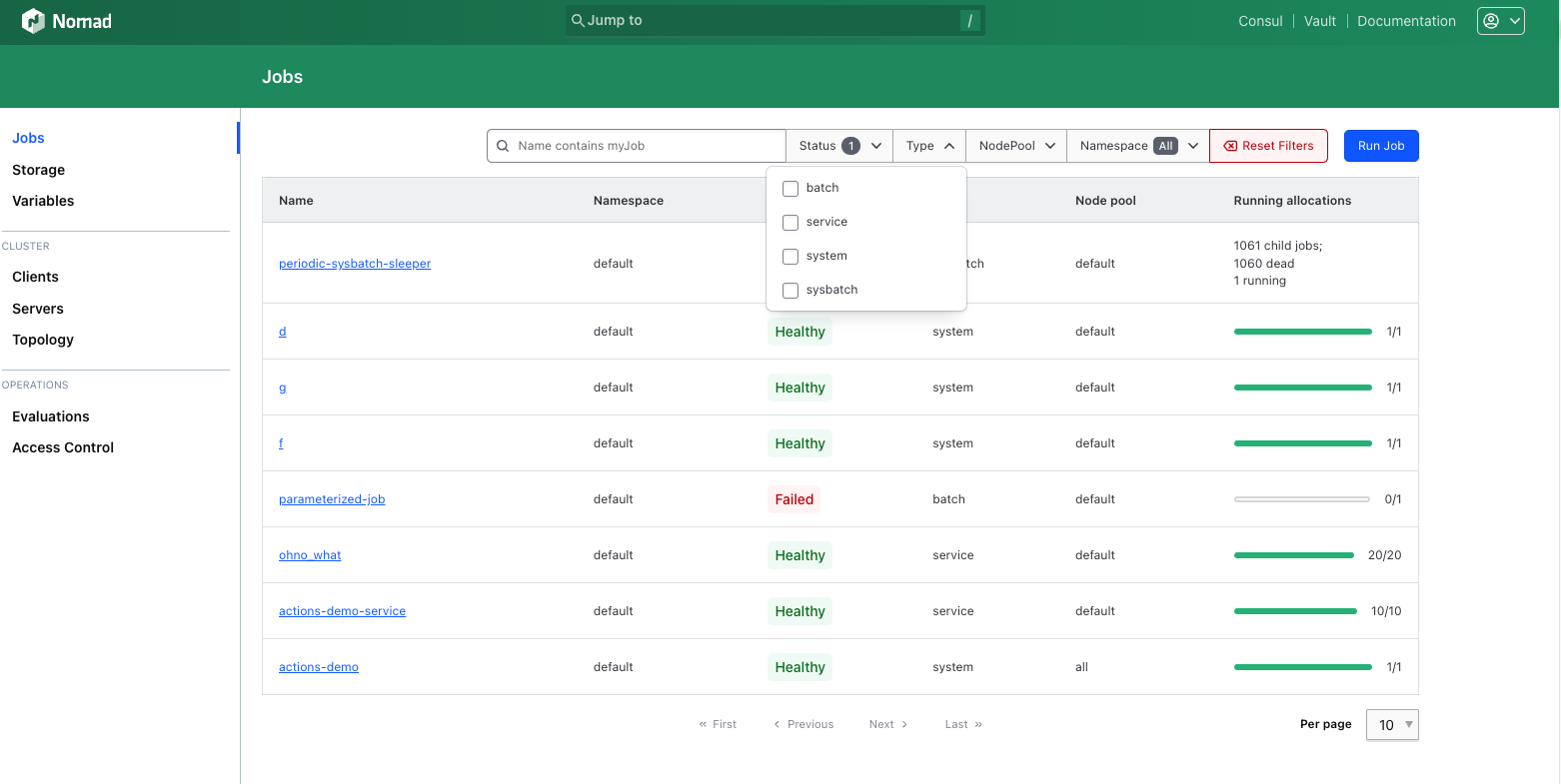

Nomad's UI lets users explore many jobs at a glance, or narrow in on individual jobs to see fine-grained details. Previously, in Nomad 1.6, we re-worked job detail pages to give users live updates during deployments or when job allocations change state. In Nomad 1.8, we're bringing these themes to the jobs index page in three major areas:

- We've prioritized showing the current state over historical state for jobs. Previously, long-garbage-collected allocation placement failures, lost nodes, and other historical representations of job state were shown alongside currently running allocations. In self-healing jobs, this often meant that a healthy job looked like it had failures, when in fact Nomad had replaced or rescheduled those failures. Now, the jobs list tries to show accurate allocation information and meaningful statuses (like "Degraded", or "Recovering") to better illustrate the current state of a job.

- We've added server-side pagination. For users with many thousands of jobs, the main landing page for the web UI will no longer have a noticeable delay on rendering. Users can control page length and filter paginated jobs appropriately.

- We've added live updating. Previously, users had to manually refresh the page to see newly created jobs, or to hide jobs that had been stopped and garbage-collected. Now, the jobs list will update in real-time as jobs are created, updated, or removed. Further, any changes to allocations of jobs on-page will be reflected in the status of that job in real-time.

Additionally, we've made some enhancements to searching and filtering on the jobs index page. In addition to filters for Status, Type, Node Pool, and Namespace, the UI now allows for filter expressions to refine the list at a granular level.

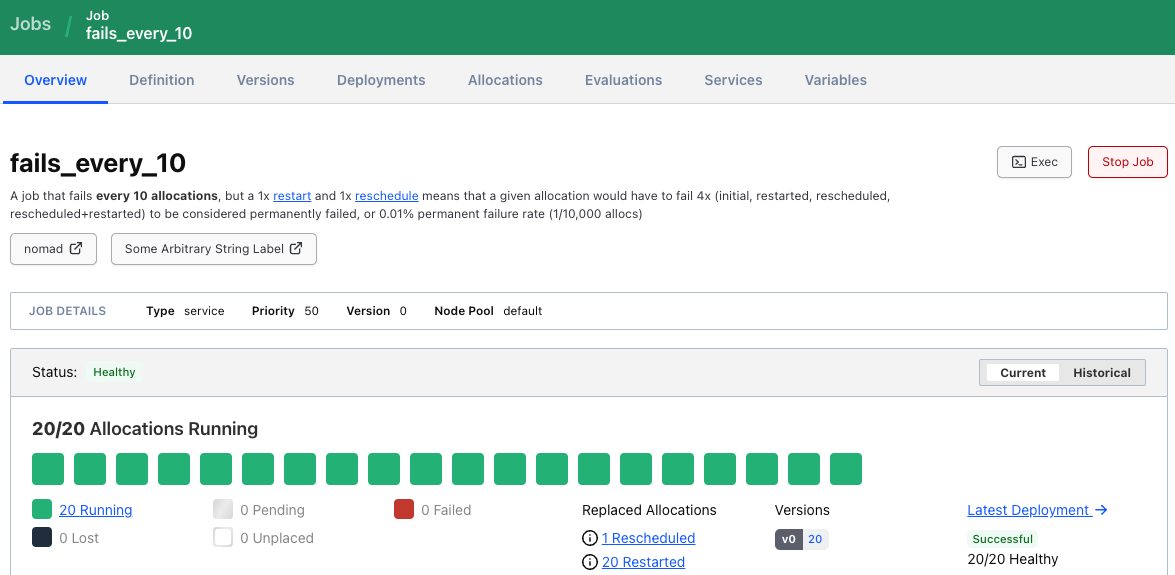

Job descriptions in the web UI

Nomad 1.8 includes enhancements for annotating job specifications, allowing job authors to easily convey additional information that may be helpful for themselves and others during regular operation. Below is an example of a job specification with a ui job block and its rendered view within the Nomad UI:

    job "fails_every_10" {
      ui {
        description = "A job that fails **every 10 allocations**, but a 1x [restart](https://nomadproject.io) and 1x reschedule means that a given allocation would have to fail 4x (initial, restarted, rescheduled, rescheduled+restarted) to be considered permanently failed, or 0.01% permanent failure rate (1/10,000 allocs)"

        link {
          label = "nomad"
          url   = "https://nomadproject.io"
        }

        link {
          label = "Some Arbitrary String Label"
          url   = "http://hashicorp.com/"
        }
      }

      ...
    }

Time-based task execution (Enterprise)

Nomad 1.8 introduces time-based execution, a new way to govern when tasks run. With a cron-like syntax, operators can now schedule when tasks should execute and when they should stop. This reduces operational risk and complexity where workloads are only permitted to run during certain business hours. Operators can also opt a task in or out of its schedule through the web UI. This makes it easier to manage the execution of time-sensitive workloads such as high-frequency trading (HFT) or high-performance computing (HPC) workloads.

    job "example-task-with-schedule" {
      type = "service"
      ...
        // scheduled task
        task "business" {
          schedule {
            cron {
              // start is a full cron expression, except:
              // * it must have 6 fields (no seconds in front)
              // * it may not contain "/" or ","
              // start 09:30 AM on weekdays:
              start = "30 9 * * MON-FRI *"

              // end is a partial cron expression with only minute & hour.
              // end at 16:00 after the start time on the same day:
              end = "0 16"

              // timezone is any available in the system's tzdb
              timezone = "America/New_York"
            }
          }

          driver = "docker"

          config {
            image   = "python:slim"
            command = "python3"
            args    = ["./local/trap.py"]
          }

          template {
            destination = "local/trap.py"
            data        = file("trap.py") # apps should handle the kill_signal
          }
        }
      }
    }

Support for specifying existing cgroups

cgroups provide resource isolation for processes on Linux. Although Nomad currently provides the ability to manage cgroups for certain task drivers, Nomad makes opinionated decisions regarding cpusets, memory limits, and cpu limits. HFT and HPC workloads may leverage custom cgroups configured on the host operating system, which are managed externally from Nomad. With this new capability, a custom cgroup can be specified with the new cgroup_v2_override or cgroup_v1_override field in the raw_exec task driver’s config block, for cgroups v2 and cgroups v1 respectively. When a task uses a custom cgroup in this way, no resource limits (cores, cpu, memory) are enforced:

    job "example" {
      type = "service"

      group "example_group" {
        task "example_task" {
          driver = "raw_exec"

          config {
            command            = "/bin/bash"
            args               = ["-c", "sleep 9000"]
            cgroup_v2_override = "custom.slice/app.scope"
          }

          resources {
            cpu    = 1024
            memory = 512
          }
        }
      }
    }

nomad-bench: Benchmarking framework for our core scheduler

nomad-bench is a testing framework that provisions infrastructure used to run tests and benchmarks against Nomad test clusters. The infrastructure consists of Nomad test clusters that each have a set of servers with hundreds or thousands of simulated nodes created using nomad-nodesim.

The Nomad server processes are not simulated and are expected to run on their own hosts, mimicking real-world deployments. Metrics are then gathered from the Nomad servers for use with benchmarking and load testing. We hope to share more details in later blogs and also document learnings from our benchmarking efforts to guide requirements and sizing recommendations for production deployments.

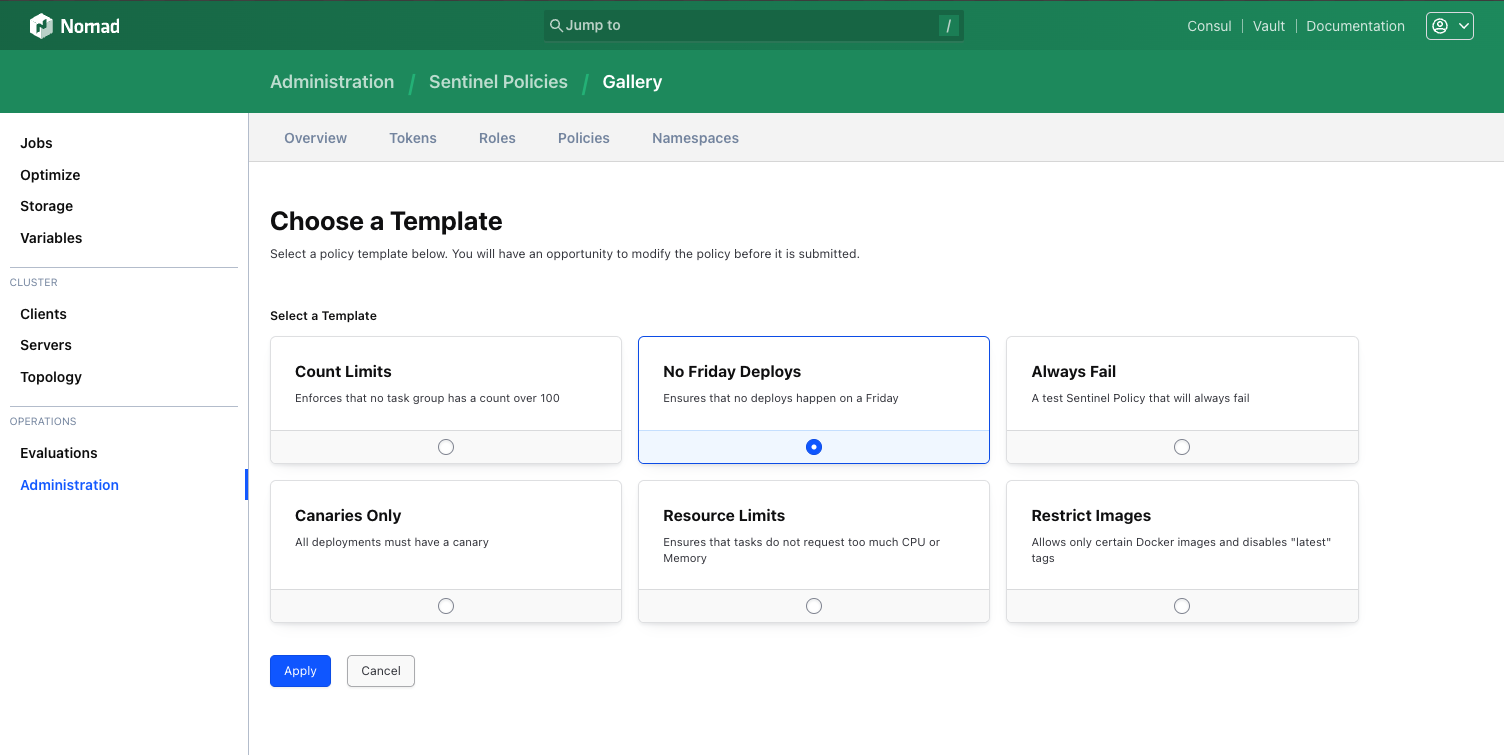

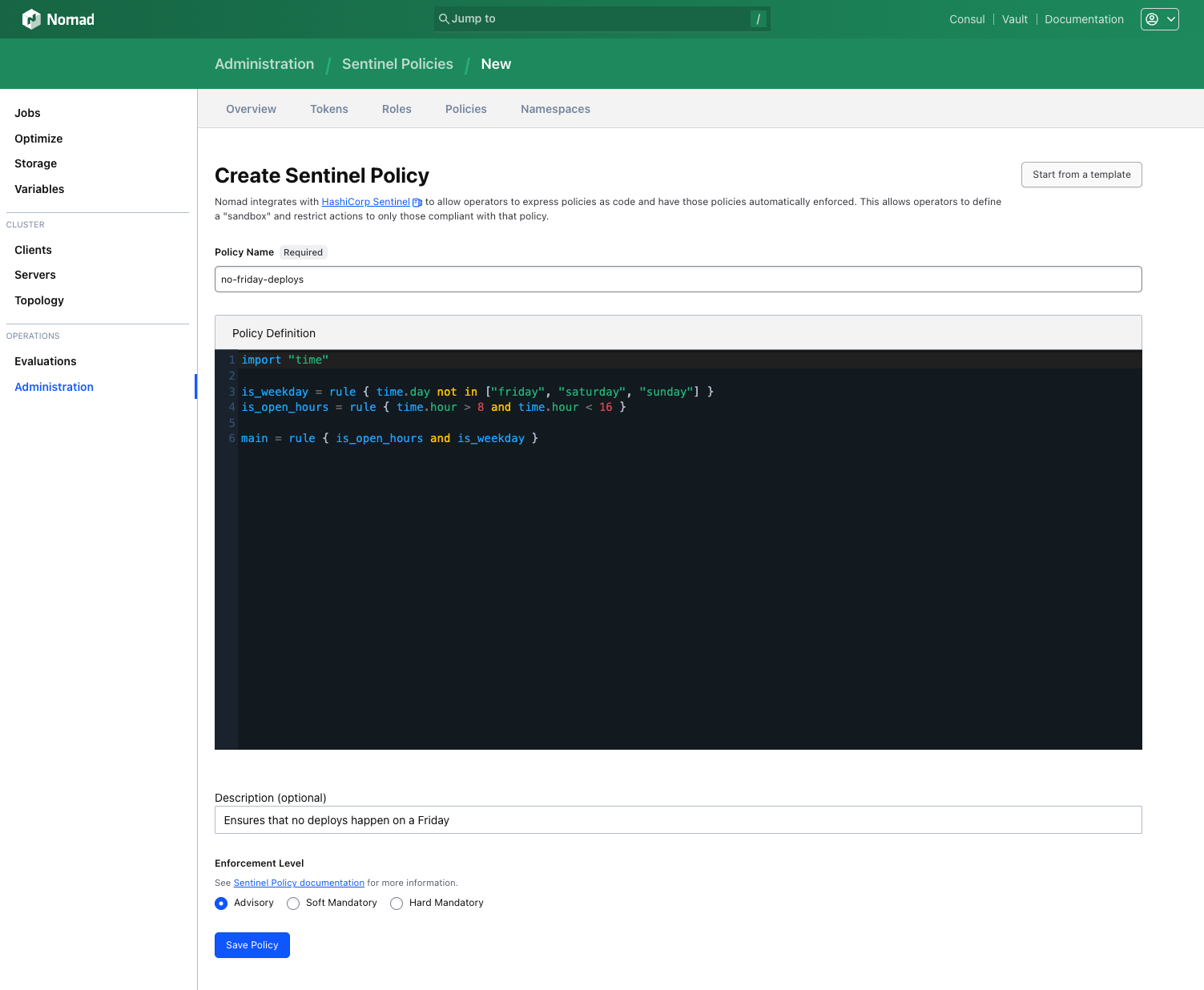

Sentinel policy management in the web UI

Nomad Enterprise integrates with HashiCorp Sentinel for fine-grained policy enforcement. Sentinel allows operators to express their policies as code and have them automatically enforced. This allows operators to define a "sandbox" and restrict actions to only those compliant with policy. Nomad 1.8 now allows Enterprise users to manage Sentinel policies directly within the Nomad UI. Users can create a Sentinel policy based on a template provided from the UI or create policies directly using an editor for Sentinel policies.

The standard templates in the UI include common building-block policies like time-based enforcement (e.g. no Friday deployments) and image-based enforcement (e.g. only operator-specified images may be used in job specifications).

After selecting a policy template to use, users can then edit the policy directly within the Nomad UI with the Sentinel policy editor.

GitHub Actions for Nomad and Nomad Pack

In addition to the release of Nomad 1.8, we’re pleased to announce the availability of two GitHub Actions — setup-nomad and setup-nomad-pack — which provide an easy way to leverage the nomad and nomad-pack binaries as part of your GitHub Actions pipeline. Below is an example of how to leverage the nomad binary for setting up Nomad jobs within the same repository. Refer to setup-nomad#Usage and setup-nomad-pack#Usage for further details.

    name: nomad

    on:
      push:

    env:
      PRODUCT_VERSION: "1.8.0"
      NOMAD_ADDR: "$"

    jobs:
      nomad:
        runs-on: ubuntu-latest
        name: Run Nomad
        steps:
          - name: Print `env`
            run: env

          # see https://github.com/actions/checkout
          - name: Checkout
            uses: actions/checkout@v4

          # see https://github.com/hashicorp/setup-nomad
          - name: Setup `nomad`
            uses: hashicorp/setup-nomad@v1.0.0
            with:
              version: "$"

          - name: Run `nomad version`
            run: "nomad version"

          - name: Validate Nomad Job
            run: "nomad job validate http.nomad.hcl"

          - name: Run Nomad Job
            run: "nomad run http.nomad.hcl"

Deprecations

Nomad 1.8 officially deprecates the LXC task driver and ECS task driver. Archival of both projects and removal of support is scheduled for Nomad 1.9. Both plug-ins are maintained separately from the Nomad Core project and are not subject to the LTS program.

Getting started with Nomad 1.8

Nomad 1.8 adds a variety of new features and enhancements. We encourage you to try them out:

- Download Nomad 1.8 from the project website.

- Learn more about Nomad with tutorials on the HashiCorp Developer site.

- Contribute to Nomad by submitting a pull request for a GitHub issue with the “help wanted” or “good first issue” label.

- Participate in our community forums, office hours, and other events.

from HashiCorp Blog https://ift.tt/I9S8WRu
