As adoption of artificial intelligence (AI) has surged, Secure by Design for AI has emerged as a critical paradigm. AI is being integrated into every aspect of our lives, from healthcare and finance to developer tools, autonomous vehicles, and smart cities, and its integration into critical infrastructure means we must move quickly to understand and combat threats.

Necessity of Secure by Design for AI

Because AI is moving into critical infrastructure so quickly, security measures must be embedded into AI products from the beginning and evolve as the model evolves. This proactive approach helps AI systems stay resilient against emerging threats and adapt to new challenges as they arise. In this article, we will explore two contrasting examples: the developer industry and the healthcare industry.

Complexities of threat modeling in AI

AI introduces new challenges when building an accurate threat model. Until the data reaches a state where simple edit and validation checks can be programmed systematically, AI validation checks need to learn with the system and focus on data manipulation, corruption, and extraction.

- Data poisoning: Data poisoning is a significant risk in AI, where the integrity of the data used by the system can be compromised. This can happen intentionally or unintentionally and can lead to severe consequences. For example, bias and discrimination in AI systems have already led to issues, such as the wrongful arrest of a man in Detroit due to a false facial recognition match. Such incidents highlight the importance of unbiased models and diverse data sets. Testing for bias and involving a diverse workforce in the development process are critical steps in mitigating these risks.

In healthcare, bias may be simpler to detect because you can examine the data across fields such as gender and race.

In developer tools, bias is less clear-cut. Bias could result from the underrepresentation of certain programming languages, such as Clojure. Bias may even result from code samples that reflect regional differences in coding preferences and teaching. In developer tools, you likely won’t have the information available to detect this bias. IP addresses may tell you where a person currently lives, but not where they grew up or learned to code. Therefore, detecting bias will be more difficult.

- Data manipulation: Attackers can manipulate data sets with malicious intent, altering how AI systems behave.

- Privacy violations: Without proper data controls, personal or sensitive information could unintentionally be introduced into the system, potentially leading to privacy violations. Establishing strong data management practices to prevent such scenarios is crucial.

- Evasion and abuse: Malicious actors may attempt to alter inputs to manipulate how an AI system responds, thereby compromising its integrity. There’s also the potential for AI systems to be abused in ways developers did not anticipate. For example, AI-driven impersonation scams have led to significant financial losses, such as the case where an employee transferred $26 million to scammers impersonating the company’s CFO.

These examples underscore the need for controls at various points in the AI data lifecycle to identify and mitigate “bad data” and ensure the security and reliability of AI systems.
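As one illustration of such a control, the healthcare bias check described above can be sketched as a simple representation test over a demographic field. This is a minimal sketch, not a complete fairness audit: the function name `underrepresented_groups`, the 10% cutoff, and the synthetic records are all assumptions made for the example.

```python
from collections import Counter

def underrepresented_groups(records, field, min_share=0.10):
    """Return each group whose share of `field` values falls below `min_share`.

    `min_share` is a hypothetical cutoff; a real threshold depends on the
    population the model is meant to serve.
    """
    counts = Counter(r[field] for r in records if r.get(field) is not None)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: n / total for group, n in counts.items() if n / total < min_share}

# Synthetic records for illustration only.
records = (
    [{"gender": "female"}] * 48
    + [{"gender": "male"}] * 47
    + [{"gender": "nonbinary"}] * 5
)

print(underrepresented_groups(records, "gender"))
```

A check like this only flags gaps in the training data. It does not show that the model's outputs are unbiased, so it complements rather than replaces bias testing of the model itself.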

Key areas for implementing Secure by Design in AI

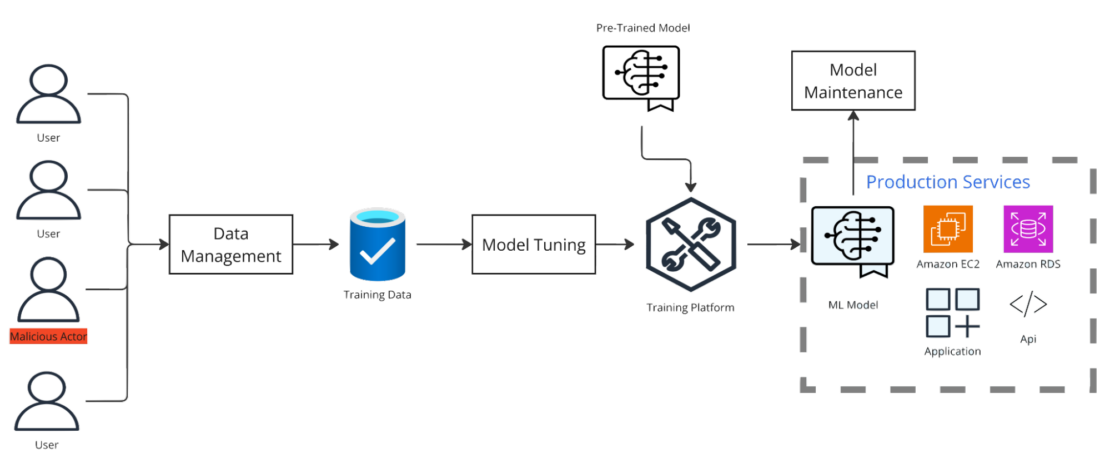

To effectively secure AI systems, implementing controls in three major areas is essential (Figure 1):

1. Data management

The key to data management is to understand what data needs to be collected to train the model, to identify the sensitive data fields, and to prevent the collection of unnecessary data. It also involves having the right checks and balances in place to keep unneeded or bad data out.

In healthcare, sensitive data fields are easy to identify. Doctors’ offices often collect national identifiers, such as driver’s licenses, passports, and social security numbers. They also collect date of birth, race, and many other sensitive data fields. If the tool is aimed at helping doctors identify potential conditions faster based on symptoms, you would need anonymized data but would still need to collect certain factors such as age and race. You would not need to collect national identifiers.

In developer tools, sensitive data may not be as clearly defined. For example, an environment variable may be used to pass secrets or confidential information, such as the image name, from the developer to the AI tool. There may be secrets in fields you would not suspect. Data management in this scenario involves blocking the collection of fields where sensitive data could exist, building mechanisms into the tool that scrub sensitive data before it reaches the model, or both.
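A minimal sketch of both approaches for environment variables might look like the following. The blocked field names, the name patterns, and the helper `scrub_env` are hypothetical and chosen only for illustration; the two value patterns match the well-known shapes of AWS access key IDs and PEM private key headers, and a real tool would need a much broader, maintained rule set.

```python
import re

# Hypothetical rules for illustration; not an exhaustive secret detector.
BLOCKED_NAMES = {"IMAGE_NAME"}  # fields that are never collected at all
SENSITIVE_NAME = re.compile(r"SECRET|TOKEN|PASSWORD|API_?KEY|CREDENTIAL", re.IGNORECASE)
SENSITIVE_VALUE = re.compile(r"AKIA[0-9A-Z]{16}|-----BEGIN [A-Z ]*PRIVATE KEY-----")
REDACTED = "[REDACTED]"

def scrub_env(env):
    """Return a copy of `env` that is safe to pass on toward the model."""
    clean = {}
    for name, value in env.items():
        if name in BLOCKED_NAMES:
            continue  # block collection of the field entirely
        if SENSITIVE_NAME.search(name) or SENSITIVE_VALUE.search(value):
            clean[name] = REDACTED  # keep the field name, drop the value
        else:
            clean[name] = value
    return clean

env = {
    "DB_PASSWORD": "hunter2",
    "IMAGE_NAME": "internal/payments:1.4",
    "BUILD_NOTE": "AKIAIOSFODNN7EXAMPLE",  # a secret in a field you would not suspect
    "LOG_LEVEL": "debug",
}

print(scrub_env(env))
```

Checking values as well as names matters here because, as noted above, secrets can appear in fields whose names give no hint of their contents.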

Data management should include the following:

- Implementing checks for unexpected data: In healthcare, this process may involve “allow” lists for certain data fields to prevent collecting irrelevant or harmful information. In developer tools, it’s about ensuring the model isn’t trained on malicious code, such as unsanitized inputs that could introduce vulnerabilities.

- Evaluating the legitimacy of users and their activities: In healthcare tools, this step could mean verifying that users are licensed professionals, while in developer tools, it might involve detecting and mitigating the impact of bot accounts or spam users.

- Continuous data auditing: This process ensures that unexpected data is not collected and that the data checks are updated as needed.
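For the healthcare case, an allow list combined with a running audit of rejected fields could be sketched as follows. The field names in `ALLOWED_FIELDS` and the helper `filter_record` are assumptions for this example, not a recommended schema.

```python
from collections import Counter

# Hypothetical allow list for a symptom-based diagnostic tool.
ALLOWED_FIELDS = {"age", "race", "symptoms"}

# Running tally of unexpected field names, kept for periodic audits.
unexpected_seen = Counter()

def filter_record(record):
    """Keep only allow-listed fields and record the names of anything else.

    Only the names of unexpected fields are tallied, never their values,
    so the audit trail does not itself become a store of sensitive data.
    """
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    unexpected = sorted(set(record) - ALLOWED_FIELDS)
    unexpected_seen.update(unexpected)
    return kept, unexpected

kept, unexpected = filter_record(
    {"age": 54, "race": "unspecified", "symptoms": ["fatigue"], "ssn": "000-00-0000"}
)
print(kept)
print(unexpected)
```

Reviewing `unexpected_seen` on a schedule is one way to notice when upstream forms start sending new fields, which is the point at which the allow list itself may need updating.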

2. Alerting and monitoring

With AI, alerting and monitoring are imperative to ensuring the health of the data model. Controls must be both adaptive and configurable to detect anomalous and malicious activities. As AI systems grow and adapt, so too must the controls. Establish thresholds for data, automate adjustments where possible, and conduct manual reviews where necessary.

In a healthcare AI tool, you might set a threshold before new data is surfaced to ensure its accuracy. For example, if patients begin reporting a new symptom that is believed to be associated with diabetes, you may not report this to doctors until it has been reported by a certain percentage of total patients, such as 15%.
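The threshold rule in this example can be sketched in a few lines. The function name `should_surface` is hypothetical, and the 15% default simply mirrors the figure used above; integer arithmetic avoids floating-point edge cases exactly at the threshold.

```python
def should_surface(symptom_reports, total_patients, threshold_percent=15):
    """Return True once a symptom has been reported by enough patients.

    Compares reports * 100 against threshold_percent * total so the
    boundary case (exactly 15%) is handled without float rounding.
    """
    if total_patients <= 0:
        return False
    return symptom_reports * 100 >= threshold_percent * total_patients

print(should_surface(120, 1000))  # 12% of patients
print(should_surface(150, 1000))  # exactly 15% of patients
```

Keeping the threshold as a parameter reflects the point that controls should be configurable, so the value can be adjusted or routed to manual review as the system matures.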

In a developer tool, this might involve determining when new code should be incorporated into the model as a prompt for other users. The model would need to be able to log and analyze user queries and feedback, track unhandled or poorly handled requests, and detect new patterns in usage. Data should be analyzed for high frequencies of unhandled prompts, and alerts should be generated to ensure that additional data sets are reviewed and added to the model.
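One way to sketch the unhandled-prompt analysis is to group logged requests by a topic label and alert when the unhandled rate is high. The event shape `(topic, handled)`, the function `unhandled_alerts`, and both thresholds are assumptions for this example; how a request is classified as handled depends on the tool.

```python
from collections import Counter

def unhandled_alerts(events, min_requests=20, max_unhandled_rate=0.25):
    """Return (topic, unhandled_rate) pairs that warrant review.

    `events` is an iterable of (topic, handled) tuples, where `topic` is a
    hypothetical label such as the detected programming language. Topics
    with fewer than `min_requests` events are skipped to avoid noisy alerts.
    """
    totals, unhandled = Counter(), Counter()
    for topic, handled in events:
        totals[topic] += 1
        if not handled:
            unhandled[topic] += 1

    alerts = []
    for topic, total in totals.items():
        rate = unhandled[topic] / total
        if total >= min_requests and rate > max_unhandled_rate:
            alerts.append((topic, rate))
    return sorted(alerts, key=lambda alert: alert[1], reverse=True)

# Synthetic log for illustration only.
events = (
    [("clojure", False)] * 15 + [("clojure", True)] * 5
    + [("python", False)] * 5 + [("python", True)] * 95
    + [("zig", False)] * 3
)

print(unhandled_alerts(events))
```

In this synthetic log, only the topic with both enough traffic and a high unhandled rate is flagged, which is the signal that additional data sets for it should be reviewed.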

3. Model tuning and maintenance

Producers of AI tools should regularly review and adjust AI models to ensure they remain secure. This includes monitoring for unexpected data, adjusting algorithms as needed, and ensuring that sensitive data is scrubbed or redacted appropriately.

For healthcare, model tuning may be more intensive. Results may be compared to published medical studies to ensure that patient conditions are in line with baselines established elsewhere in the world. Audits should also be conducted so that data from doctors with reported malpractice claims, or from doctors whose medical license has been revoked, is scrubbed from the system and potentially compromised data sets do not influence the model.

In a developer tool, model tuning will look very different. You may look at hyperparameter optimization using techniques such as grid search, random search, and Bayesian search. You may study subsets of data; for example, you may perform regular reviews of the most recent data looking for new programming languages, frameworks, or coding practices.
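To make the first two techniques concrete, here is a minimal sketch of grid search and random search over a small hyperparameter space. The function `validation_score` is a stand-in for training and evaluating a real model, and the parameter names and values are assumptions for illustration. Bayesian search is omitted because it typically relies on a dedicated optimization library.

```python
import itertools
import random

def validation_score(learning_rate, batch_size):
    """Stand-in for a real train-and-evaluate step; best at (0.01, 32)."""
    return -abs(learning_rate - 0.01) * 100 - abs(batch_size - 32) / 32

# Hypothetical search space.
GRID = {"learning_rate": [0.001, 0.01, 0.1], "batch_size": [16, 32, 64]}

def grid_search(grid, score):
    """Evaluate every combination in the grid and return the best one."""
    names = list(grid)
    candidates = (
        dict(zip(names, values)) for values in itertools.product(*grid.values())
    )
    return max(candidates, key=lambda params: score(**params))

def random_search(grid, score, trials, seed=0):
    """Evaluate `trials` randomly sampled combinations and return the best."""
    rng = random.Random(seed)
    candidates = [
        {name: rng.choice(options) for name, options in grid.items()}
        for _ in range(trials)
    ]
    return max(candidates, key=lambda params: score(**params))

print(grid_search(GRID, validation_score))
print(random_search(GRID, validation_score, trials=4))
```

Grid search is exhaustive and so grows quickly with the number of parameters, while random search trades completeness for a fixed evaluation budget.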

Model tuning and maintenance should include the following:

- Perform data audits to ensure data integrity and that unnecessary data is not inadvertently being collected.

- Review whether “allow” lists and “deny” lists need to be updated.

- Regularly audit and monitor alerts for algorithms to determine if adjustments need to be made; consider the population of your user base and how the model is being trained when adjusting these parameters.

- Ensure you have the controls in place to isolate data sets for removal if a source has become compromised; consider unique identifiers that allow you to identify a source without providing unnecessary sensitive data.

- Regularly back up data models so you can return to a previous version without heavy loss of data if the source becomes compromised.
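The source-isolation item in this list can be sketched with keyed hashing from Python's standard library. The helper names, the record shape, and the idea of deriving the identifier from a license number are assumptions for this example; key management and the backup process itself are out of scope here.

```python
import hashlib
import hmac

def source_id(raw_identifier, key):
    """Derive an opaque, stable identifier for a data source.

    A keyed hash (HMAC-SHA256) lets records be traced back to a source
    without storing the raw identifier alongside the training data.
    """
    digest = hmac.new(key, raw_identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

def remove_source(records, compromised_id):
    """Return the records not from the compromised source, plus a removal count."""
    kept = [r for r in records if r["source_id"] != compromised_id]
    return kept, len(records) - len(kept)

key = b"demo-key-for-illustration-only"  # hypothetical; store real keys securely
clinic_a = source_id("LIC-001", key)
clinic_b = source_id("LIC-002", key)

records = [
    {"source_id": clinic_a, "symptom": "fatigue"},
    {"source_id": clinic_b, "symptom": "thirst"},
    {"source_id": clinic_a, "symptom": "blurred vision"},
]

kept, removed = remove_source(records, clinic_a)
print(removed, len(kept))
```

Tagging every record with such an identifier at collection time is what makes later removal practical. Without it, isolating a compromised source may require restoring from a backup instead.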

AI security begins with design

Security must be a foundational aspect of AI development, not an afterthought. By identifying data fields upfront, conducting thorough AI threat modeling, implementing robust data management controls, and continuously tuning and maintaining models, organizations can build AI systems that are secure by design.

This approach protects against potential threats and ensures that AI systems remain reliable, trustworthy, and compliant with regulatory requirements as they evolve alongside their user base.


from Docker https://ift.tt/jbaQHRh
