HashiCorp Nomad is a simple and flexible orchestrator used to deploy and manage containers and non-containerized applications across multiple cloud, on-premises, and edge environments. It is widely adopted and used in production by organizations such as BT Group and Epic Games. Today, we are excited to announce that Nomad 1.9 is now generally available.

Here’s what’s new in Nomad 1.9:

- Updated NVIDIA device driver for Multi-Instance GPU support

- Quotas for device resources (Enterprise)

- NUMA awareness for device resources (Enterprise)

- exec2 task driver general availability

- Golden job versions

- libvirt task driver beta

- Improved IPv6 support

Updated NVIDIA device driver for Multi-Instance GPU support

Nomad has supported the NVIDIA device driver for several years, and we are excited to add support for Multi-Instance GPU (MIG). This enhances Nomad’s ability to schedule workloads across your NVIDIA hardware and make full use of your GPU investment.

The NVIDIA device plugin uses NVML bindings to query available NVIDIA devices and exposes them to Nomad via the Fingerprint RPC. You can exclude specific GPUs from fingerprinting by listing their UUIDs in the ignored_gpu_ids field (see below).

The plugin now detects whether a GPU has MIG enabled. When it does, each MIG instance is fingerprinted as an individual GPU that can be requested and scheduled independently.
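The fingerprinting behavior described above can be modeled in a few lines of Python. This is a sketch, not the plugin's code, which is written in Go against NVML. The Gpu and MigInstance records and the fingerprint_devices function are hypothetical. The sketch also assumes that ignored_gpu_ids is matched against the UUID of each reported device. A GPU without MIG is reported once, and a MIG-enabled GPU is replaced by one device per MIG instance.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MigInstance:
    uuid: str
    profile: str  # illustrative MIG profile name, e.g. "1g.10gb"


@dataclass
class Gpu:
    uuid: str
    model: str
    mig_enabled: bool = False
    mig_instances: List[MigInstance] = field(default_factory=list)


def fingerprint_devices(gpus, ignored_gpu_ids):
    """Return one schedulable device record per GPU, or per MIG instance.

    Hypothetical model: ignored_gpu_ids is compared against the UUID of
    each reported device (the whole GPU, or each of its MIG instances).
    """
    devices = []
    for gpu in gpus:
        if gpu.mig_enabled:
            # With MIG enabled, every instance is reported as its own GPU.
            candidates = [(inst.uuid, f"{gpu.model} {inst.profile}") for inst in gpu.mig_instances]
        else:
            candidates = [(gpu.uuid, gpu.model)]
        for uuid, name in candidates:
            if uuid not in ignored_gpu_ids:
                devices.append({"id": uuid, "name": name})
    return devices


gpus = [
    Gpu("GPU-a", "A100", mig_enabled=True,
        mig_instances=[MigInstance("MIG-1", "1g.10gb"), MigInstance("MIG-2", "3g.40gb")]),
    Gpu("GPU-b", "T4"),
    Gpu("GPU-c", "T4"),
]
print([d["id"] for d in fingerprint_devices(gpus, {"GPU-c"})])  # ['MIG-1', 'MIG-2', 'GPU-b']
```

In this example the A100 disappears as a single device and its two MIG instances take its place. The ignored T4 is never offered to the scheduler.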

The plugin is configured in the Nomad client's plugin block:

```hcl
plugin "nvidia" {
  config {
    ignored_gpu_ids    = ["uuid1", "uuid2"]
    fingerprint_period = "5s"
  }
}
```

Quotas for device resources (Enterprise)

Nomad has supported resource quotas since version 0.7 as a mechanism for limiting the CPU, memory, and network resources that tasks can consume. Quotas are an Enterprise-only feature and are scoped to namespaces and regions. Nomad 1.9 extends quotas to also limit device resources.
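The admission check that a device quota implies can be sketched as follows. This is an illustrative Python model, not Nomad Enterprise's quota implementation, and the function names and data shapes are hypothetical. It borrows the jobspec's device naming, where a name can be <type>, <vendor>/<type>, or <vendor>/<type>/<model>, and it assumes a quota entry counts every device that matches its name. It also assumes that devices with no quota entry are unlimited.

```python
def device_matches(name, device_id):
    """True if a device name covers a fully qualified vendor/type/model ID."""
    vendor, dtype, model = device_id.split("/")  # expects exactly three parts
    parts = name.split("/")
    if len(parts) == 1:
        return parts[0] == dtype
    if len(parts) == 2:
        return parts == [vendor, dtype]
    return parts == [vendor, dtype, model]


def fits_device_quota(limits, allocated, requested):
    """Check a new placement against one region's device limits.

    limits:    quota device name -> maximum device count
    allocated: IDs of devices already in use in the namespace
    requested: IDs of devices the new allocation would use
    """
    for name, limit in limits.items():
        used = sum(device_matches(name, d) for d in allocated)
        wanted = sum(device_matches(name, d) for d in requested)
        if used + wanted > limit:
            return False
    return True  # devices without a quota entry are not limited here


limits = {"nvidia/gpu/1080ti": 1}
print(fits_device_quota(limits, [], ["nvidia/gpu/1080ti"]))                     # True
print(fits_device_quota(limits, ["nvidia/gpu/1080ti"], ["nvidia/gpu/1080ti"]))  # False
```

Counting by name match means a broad entry such as "gpu" caps every GPU in the namespace. A fully qualified entry caps only that model.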

Quota limits are defined per region, and the region_limit block now supports a device block:

```hcl
name        = "default-quota"
description = "Limit the shared default namespace"

# Create a limit for the global region. Additional limits may
# be specified in order to limit other regions.
limit {
  region = "global"

  region_limit {
    cores      = 0
    cpu        = 2500
    memory     = 1000
    memory_max = 1000

    device "nvidia/gpu/1080ti" {
      count = 1
    }
  }

  variables_limit = 1000
}
```

NUMA awareness for device resources (Enterprise)

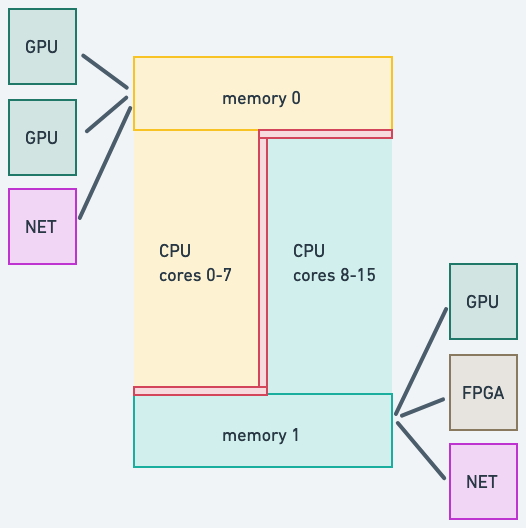

Introduced in Nomad 1.7, NUMA-aware scheduling lets Nomad Enterprise place multi-core, latency-sensitive workloads with awareness of the host's non-uniform memory access (NUMA) topology. NUMA-aware scheduling can greatly increase the performance of your Nomad tasks (for more information, see the Nomad CPU concepts documentation).

Nomad correlates CPU cores with memory nodes and assigns tasks to specific CPU cores to minimize memory access across NUMA nodes. Nomad 1.9 extends this functionality to also correlate devices with memory nodes, so NUMA-aware scheduling takes device associativity into account when making scheduling decisions.

The numa block inside a task's resources block now accepts a devices list, which names the devices that must be scheduled with NUMA awareness:

```hcl
resources {
  cores  = 8
  memory = 16384

  device "nvidia/gpu/H100" { count = 2 }
  device "intel/net/XXVDA2" { count = 1 }
  device "xilinx/fpga/X7" { count = 1 }

  numa {
    affinity = "require"

    devices = [
      "nvidia/gpu/H100",
      "intel/net/XXVDA2"
    ]
  }
}
```
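To illustrate what affinity = "require" means once devices are involved, here is a simplified Python model of the placement check. It is a sketch, not Nomad Enterprise's scheduler, and the topology dictionary and pick_numa_node function are hypothetical. It assumes that the reserved cores and every device named in the numa block must come from a single NUMA node. Other requested devices, such as the FPGA in the jobspec above, may come from any node on the host.

```python
from collections import Counter


def pick_numa_node(topology, cores, devices, numa_devices):
    """Return a NUMA node ID that satisfies the request, or None.

    topology:     node ID -> {"free_cores": int, "devices": [device names]}
    devices:      device name -> requested count
    numa_devices: device names that must be local to the chosen node
    """
    # Devices outside the numa block may come from any node on the host.
    host = Counter()
    for node in topology.values():
        host.update(node["devices"])
    for name, count in devices.items():
        if name not in numa_devices and host[name] < count:
            return None

    # Cores and NUMA-bound devices must all share one node ("require").
    for node_id in sorted(topology):
        node = topology[node_id]
        local = Counter(node["devices"])
        if node["free_cores"] >= cores and all(
            local[name] >= devices.get(name, 0) for name in numa_devices
        ):
            return node_id
    return None


topology = {
    0: {"free_cores": 16, "devices": ["nvidia/gpu/H100", "intel/net/XXVDA2", "xilinx/fpga/X7"]},
    1: {"free_cores": 16, "devices": ["nvidia/gpu/H100", "nvidia/gpu/H100", "intel/net/XXVDA2"]},
}
request = {"nvidia/gpu/H100": 2, "intel/net/XXVDA2": 1, "xilinx/fpga/X7": 1}
print(pick_numa_node(topology, 8, request, ["nvidia/gpu/H100", "intel/net/XXVDA2"]))  # 1
```

Node 0 is rejected because it has only one local H100, even though the host has three in total. The FPGA, which is not NUMA-bound, can still be taken from node 0.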

exec2 task driver (GA)

Nomad has always supported heterogeneous workloads. The original exec task driver offered a simple way to run binary workloads in a sandboxed environment. Nomad 1.8 then introduced the new exec2 task driver in beta, and with Nomad 1.9, exec2 is generally available with full support.

Like the exec driver, the exec2 driver executes a command for a task. However, it uses a security model optimized for running “ordinary” processes, with very short startup times and minimal CPU, disk, and memory overhead. The exec2 driver relies on kernel features such as the Landlock LSM and cgroups v2, along with the unshare system utility. With exec2, tasks no longer need chroot-based filesystem isolation, which improves both security and performance for Nomad operators.
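Because exec2 depends on these kernel features, it can help to confirm that a client host provides them before installing the driver. The following Python sketch is a hypothetical pre-flight check, not part of Nomad. It uses three tests:

- It treats the presence of cgroup.controllers at the cgroup mount point as a sign of a cgroups v2 (unified) hierarchy.
- It looks for landlock in the kernel's active LSM list at /sys/kernel/security/lsm, which requires securityfs to be mounted.
- It checks that the unshare utility is on the PATH.

The paths are parameters so the checks can be pointed elsewhere.

```python
import shutil
from pathlib import Path


def has_cgroups_v2(cgroup_root="/sys/fs/cgroup"):
    # A unified (v2) hierarchy exposes cgroup.controllers at its root.
    return (Path(cgroup_root) / "cgroup.controllers").is_file()


def has_landlock(lsm_file="/sys/kernel/security/lsm"):
    # The kernel lists active LSMs as a comma-separated string,
    # for example "lockdown,capability,landlock,yama".
    try:
        active = Path(lsm_file).read_text().strip().split(",")
    except OSError:
        return False  # securityfs not mounted, or the file is unreadable
    return "landlock" in active


def has_unshare():
    # unshare ships with util-linux on most distributions.
    return shutil.which("unshare") is not None


def exec2_preflight(cgroup_root="/sys/fs/cgroup", lsm_file="/sys/kernel/security/lsm"):
    return {
        "cgroups_v2": has_cgroups_v2(cgroup_root),
        "landlock": has_landlock(lsm_file),
        "unshare": has_unshare(),
    }


if __name__ == "__main__":
    for feature, ok in exec2_preflight().items():
        print(f"{feature:<10} {'ok' if ok else 'missing'}")
```

Run it on a client host. Any feature reported as missing is worth investigating before you schedule exec2 tasks there.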

The following job runs a task with the exec2 driver. The exec2 driver plugin must be installed on the Nomad client host before the task can run:

```hcl
job "http" {
  group "web" {
    task "python" {
      driver = "exec2"

      config {
        command = "python3"
        args    = ["-m", "http.server", "8080", "--directory", "${NOMAD_TASK_DIR}"]
        unveil  = ["r:/etc/mime.types"]
      }
    }
  }
}
```

Golden job versions

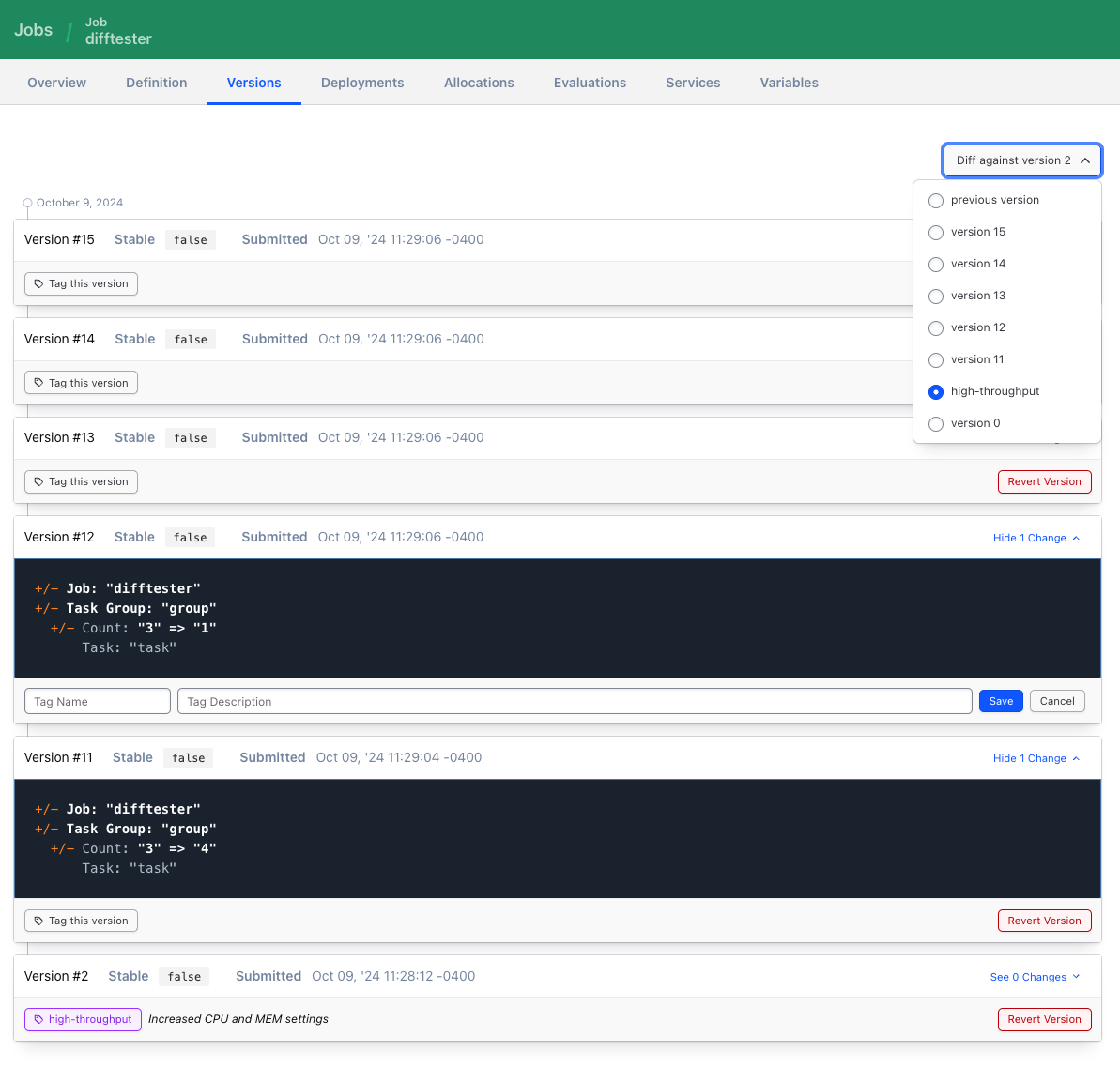

Nomad 1.9 introduces a way to preserve and compare historical versions of a job. Previously, each change to a job pushed older versions toward garbage collection. Now you can tag a job version to keep it from being garbage collected. Tags also let you compare job versions by tag name, so you can see how a job has changed over time.
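The retention rule described above can be modeled in a few lines of Python. This sketch is illustrative only. JobVersion, collect_garbage, find_by_tag, diff_specs, and the keep_latest limit are hypothetical names, not Nomad APIs, and Nomad's real garbage collector is not shown. The sketch keeps the newest keep_latest versions plus every tagged version, then compares a tagged version with the current one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class JobVersion:
    version: int
    spec: dict
    tag: Optional[str] = None  # e.g. "high-throughput"


def collect_garbage(versions, keep_latest):
    """Keep the newest keep_latest versions plus every tagged version."""
    newest = sorted(versions, key=lambda v: v.version, reverse=True)
    recent = {v.version for v in newest[:keep_latest]}
    return [v for v in versions if v.version in recent or v.tag is not None]


def find_by_tag(versions, tag):
    for v in versions:
        if v.tag == tag:
            return v
    raise KeyError(f"no job version tagged {tag!r}")


def diff_specs(old, new):
    """Map each changed field to its (old, new) pair."""
    keys = sorted(old.keys() | new.keys())
    return {k: (old.get(k), new.get(k)) for k in keys if old.get(k) != new.get(k)}


history = [
    JobVersion(0, {"cpu": 500, "memory": 256}),
    JobVersion(1, {"cpu": 2000, "memory": 1024}, tag="high-throughput"),
    JobVersion(2, {"cpu": 1000, "memory": 512}),
    JobVersion(3, {"cpu": 1000, "memory": 768}),
]
kept = collect_garbage(history, keep_latest=2)
print([v.version for v in kept])  # [1, 2, 3]
print(diff_specs(find_by_tag(kept, "high-throughput").spec, kept[-1].spec))
# {'cpu': (2000, 1000), 'memory': (1024, 768)}
```

Version 0 is dropped because it is neither among the two newest versions nor tagged. The tagged version 1 survives and can still be diffed against the current version.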

We’ve added new CLI commands nomad job tag apply and nomad job tag unset to add and remove VersionTags from job versions. They’re used like this:

```shell
nomad job tag apply -name="high-throughput" -description="Increased CPU and MEM settings" $jobID
nomad job tag unset -name="high-throughput" $jobID
```

We’ve also updated the nomad job revert command so that you can roll back to a version by its tag name:

```shell
nomad job revert difftester "high-throughput"
```

The nomad job history command now accepts -diff-tag and -diff-version flags. These make it possible to compare multiple versions against a version other than their immediate predecessor:

```shell
nomad job history -p -diff-tag=$tagName $jobID
```

These CLI updates have equivalent HTTP API endpoint changes and are also reflected in the web UI.

We hope this feature gives users more confidence about prospective changes to a job and lets them observe the amount of “drift” that takes place among their long-running jobs.

Virt task driver (beta)

Nomad 1.9 introduces a new task driver for managing virtual machines through libvirt. Libvirt is an open source API, daemon, and management tool for platform virtualization that supports KVM, Xen, VMware ESXi, QEMU, and other virtualization technologies. This new capability extends Nomad’s ability to run any workload anywhere, including in virtual machines.

The new Virt task driver offers the following capabilities:

- Start and stop a virtual machine and run a process on it

- Assign a workload identity to the running task inside the virtual machine

- Pass configuration values from Vault or other services using consul-template

- Mount host directories into virtual machines to make the allocation and task directories available inside them

- Configure port forwarding from the Nomad node to the virtual machine

- Access the running task through the task API

This new task driver is in beta, and we are actively seeking feedback. We’d like to hear more about your use cases.

Improved IPv6 support

Nomad 1.9 improves IPv6 support. We tested and resolved some known issues with server-to-server and server-to-client communications. We also tested the following:

- The Nomad CLI and UI
- Service integrations with Consul and Vault
- Workload identity
- Service registration
- Host networking and bridge networking
- The Docker driver

More work on IPv6 support will likely be needed as we explore integrations with Consul service mesh.

Deprecations

Nomad 1.9 deprecates or removes the following:

- Nomad has removed support for HCL1 job specifications and the -hcl1 flag on nomad job run and other commands. Refer to GH-20195 for more details.

- Nomad has removed the tls_prefer_server_cipher_suites agent configuration from the TLS block.

- Nomad has removed support for Nomad client agents older than 1.6.0. Older nodes will fail heartbeats. Nomad servers will mark the workloads on Nomad client agents older than 1.6.0 as lost and reschedule them normally according to the job's reschedule block.

- The LXC task driver and ECS task driver projects have been archived with the release of Nomad 1.9 and are no longer supported. Both plug-ins are maintained separately from the Nomad core project and are not subject to the LTS program.

Getting started with Nomad 1.9

Nomad 1.9 adds a variety of new features and enhancements. We encourage you to try them out:

- Download Nomad 1.9 from the project website.

- Learn more about Nomad with tutorials on the HashiCorp Developer site.

- Contribute to Nomad by submitting a pull request for a GitHub issue with the “help wanted” or “good first issue” label.

- Participate in our community forums, office hours, and other events.

- To learn more about how Nomad can solve your business-critical workload orchestration needs, talk to our sales team and solutions engineers about test-driving Nomad Enterprise.

from HashiCorp Blog https://ift.tt/gGPMcBv
